Surely computer software can't judge pictures the way we do? Assigning numerical scores to technical details is one thing, but don't we see with our souls as well as our brains?

Quantifying image quality and aesthetics has been a long-standing problem in image processing and computer vision. Technical quality assessment measures pixel-level degradations such as noise, blur, and compression artifacts. Aesthetic assessment, by contrast, captures semantic-level characteristics associated with emotion and beauty in images. Recently, deep convolutional neural networks (CNNs) trained on human-labeled data have been used to address the subjective nature of image quality for specific classes of images, such as landscapes.

However, these approaches can be limited in scope, because they typically sort images into just two classes: low quality and high quality. Google's new system instead predicts the distribution of ratings. This yields more accurate quality predictions, with higher correlation to the ground-truth ratings, and it applies to general images.
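The article does not say how a predicted distribution is compared with human ratings during training. The NIMA paper describes a normalized earth mover's distance (EMD) loss, which for ordered score bins reduces to comparing cumulative distributions. The sketch below is an illustration of that idea in plain Python, not Google's code; the function name `emd_loss`, the default `r=2`, and the example distributions are assumptions chosen for illustration.

```python
from itertools import accumulate


def emd_loss(p, q, r=2):
    """Normalized earth mover's distance between two discrete distributions
    over the same ordered score bins (e.g. scores 1..10).

    For ordered 1-D bins, EMD can be computed from the cumulative sums:
    ((1/N) * sum_k |CDF_p(k) - CDF_q(k)|^r) ** (1/r).
    """
    if len(p) != len(q):
        raise ValueError("distributions must have the same number of bins")
    cdf_p = list(accumulate(p))
    cdf_q = list(accumulate(q))
    n = len(p)
    total = sum(abs(a - b) ** r for a, b in zip(cdf_p, cdf_q))
    return (total / n) ** (1 / r)


# Hypothetical data: human votes centered on score 6.
truth = [0, 0, 0, 0, 0.2, 0.6, 0.2, 0, 0, 0]
# A prediction that is slightly off on the score scale...
pred_close = [0, 0, 0, 0, 0.1, 0.5, 0.3, 0.1, 0, 0]
# ...and one that puts all its mass on the lowest scores.
pred_far = [0.5, 0.5, 0, 0, 0, 0, 0, 0, 0, 0]

print(emd_loss(truth, pred_close) < emd_loss(truth, pred_far))  # True
```

Unlike a two-class label, this kind of loss penalizes a prediction more the farther its probability mass sits from the human ratings on the 1-to-10 scale.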

Hossein Talebi, Software Engineer, said, “In ‘NIMA: Neural Image Assessment’ we introduce a deep CNN that is trained to predict which images a typical user would rate as looking good (technically) or attractive (aesthetically). NIMA relies on the success of state-of-the-art deep object recognition networks, building on their ability to understand general categories of objects despite many variations.”
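Building on an object recognition network typically means reusing its learned features and swapping in a new output layer. The NIMA paper describes replacing the baseline classifier's last layer with a fully connected layer of 10 units followed by softmax, one output per possible score. The minimal plain-Python sketch below shows only that head. The feature vector, weights, and the names `score_head` and `NUM_BINS` are hypothetical stand-ins; a real model would take features from a pretrained backbone and learn the weights.

```python
import math
import random

NUM_BINS = 10  # one output per possible score, 1 through 10


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def score_head(features, weights, biases):
    """Fully connected layer (features -> NUM_BINS logits) followed by softmax.

    `weights` is a NUM_BINS x len(features) matrix given as a list of rows.
    """
    logits = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(logits)


# Hypothetical stand-ins: random numbers instead of backbone features
# and trained weights.
rng = random.Random(0)
features = [rng.uniform(-1, 1) for _ in range(8)]
weights = [[rng.gauss(0, 0.1) for _ in features] for _ in range(NUM_BINS)]
biases = [0.0] * NUM_BINS

dist = score_head(features, weights, biases)
print(len(dist), round(sum(dist), 6))  # 10 1.0
```

The softmax guarantees the ten outputs are positive and sum to 1, so they can be read directly as the probability of each score.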

“Our proposed network can be used to not only score images reliably and with high correlation to human perception, but also it is useful for a variety of labor-intensive and subjective tasks such as intelligent photo editing, optimizing visual quality for increased user engagement, or minimizing perceived visual errors in an imaging pipeline.”

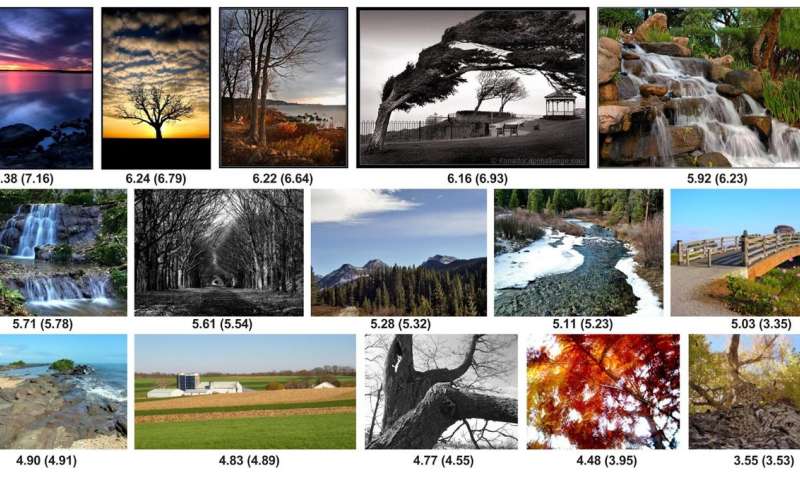

Instead of classifying images as low/high quality or regressing to the mean score, the NIMA model produces a distribution of ratings for any given image: on a scale of 1 to 10, it assigns a likelihood to each of the possible scores. This matches more directly how training data is typically captured, and it turns out to be a better predictor of human preferences when measured against other approaches.
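Because human ratings are collected as votes on a 1-to-10 scale, a photo's ground truth is naturally a histogram, and a predicted distribution carries more information than a single mean. The sketch below, in plain Python, normalizes hypothetical vote counts into a distribution and computes both the mean score (what a single-score regressor would predict) and the standard deviation (how much raters disagree). The vote counts and function names are illustrative assumptions.

```python
def normalize_votes(counts):
    """Turn raw vote counts for scores 1..10 into a probability distribution."""
    total = sum(counts)
    if total == 0:
        raise ValueError("no votes")
    return [c / total for c in counts]


def mean_and_std(dist):
    """Mean and standard deviation of a distribution over scores 1..len(dist)."""
    scores = range(1, len(dist) + 1)
    mean = sum(s * p for s, p in zip(scores, dist))
    var = sum(p * (s - mean) ** 2 for s, p in zip(scores, dist))
    return mean, var ** 0.5


# Hypothetical vote counts from 200 raters for scores 1..10.
votes = [0, 2, 8, 30, 60, 60, 30, 8, 2, 0]
dist = normalize_votes(votes)
mean, std = mean_and_std(dist)
print(f"mean={mean:.2f} std={std:.2f}")  # mean=5.50 std=1.25
```

Two photos can share a mean of 5.5 while differing sharply in spread; only a distribution-valued prediction can tell them apart.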

Various functions of the NIMA score vector (such as the mean) can then be used to rank photos aesthetically. Some test photos from the large-scale Aesthetic Visual Analysis (AVA) dataset, as ranked by NIMA, are shown below. Each AVA photo was scored by an average of 200 people in response to photography contests. After training, NIMA's aesthetic ranking of these photos closely matches the mean scores given by human raters.
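Ranking by the mean of the predicted distribution, as described above, takes only a few lines. In the plain-Python sketch below, the photo names and predicted distributions are hypothetical; another function of the distribution, such as the standard deviation, could be substituted as the sort key.

```python
def mean_score(dist):
    """Expected score under a distribution over scores 1..len(dist)."""
    return sum(s * p for s, p in enumerate(dist, start=1))


def rank_by_mean(predictions):
    """Sort (photo_id, distribution) pairs from highest to lowest mean score."""
    return sorted(predictions, key=lambda item: mean_score(item[1]), reverse=True)


# Hypothetical predicted distributions for three photos (scores 1..10).
predictions = [
    ("beach.jpg", [0, 0, 0.05, 0.10, 0.30, 0.30, 0.15, 0.10, 0, 0]),
    ("blurry.jpg", [0.05, 0.20, 0.35, 0.25, 0.10, 0.05, 0, 0, 0, 0]),
    ("sunset.jpg", [0, 0, 0, 0.05, 0.10, 0.25, 0.30, 0.20, 0.10, 0]),
]

# Prints sunset.jpg first, then beach.jpg, then blurry.jpg.
for photo_id, dist in rank_by_mean(predictions):
    print(photo_id, round(mean_score(dist), 2))
```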

Engineers noted, “Our work on NIMA suggests that quality assessment models based on machine learning may be capable of a wide range of useful functions. For instance, we may enable users to easily find the best pictures among many; or to even enable improved picture-taking with real-time feedback to the user.”

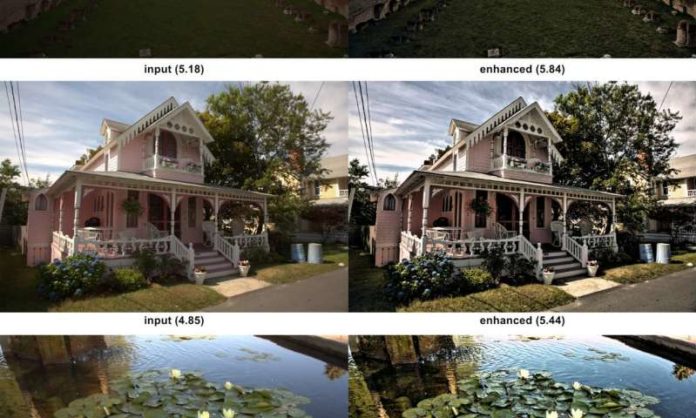

“On the post-processing side, these models may be used to guide enhancement operators to produce perceptually superior results. In a direct sense, the NIMA network (and others like it) can act as reasonable, though imperfect, proxies for human taste in photos and possibly videos. We’re excited to share these results, though we know that the quest to do better in understanding what quality and aesthetics mean is an ongoing challenge — one that will involve continuing retraining and testing of our models.”

Research paper: NIMA: Neural Image Assessment, arXiv:1709.05424 [cs.CV] arxiv.org/abs/1709.05424